Project Settings Editor Tool

This tool configures the many project settings a VR project requires: for example, targeting the Android build platform, using OpenGLES3, enabling GPU Skinning, setting texture compression, and of course disabling the Unity splash screen. It streamlines setup and doubles as a quick reference in a pinch. It has two modes, one for development and one for final builds, which makes switching between testing and production faster.
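As a rough illustration of the two-mode idea, the sketch below models the settings as an immutable profile and derives a development variant from a shared base. It is written in Python for brevity rather than as Unity editor code. The `ProjectSettings` class, the `settings_for` function, the `ASTC` value, and the `development_build` flag are all assumptions made for the example, not the tool's actual fields.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ProjectSettings:
    # Values named in the description above.
    build_target: str = "Android"
    graphics_api: str = "OpenGLES3"
    gpu_skinning: bool = True
    show_splash_screen: bool = False
    # Assumptions for illustration only: the text does not say which
    # compression format is used or what differs between the two modes.
    texture_compression: str = "ASTC"
    development_build: bool = False


BASE = ProjectSettings()

# Hypothetical profiles: both share the base and differ in a single flag.
PROFILES = {
    "development": replace(BASE, development_build=True),
    "final": BASE,
}


def settings_for(mode: str) -> ProjectSettings:
    """Return the settings profile for a mode, rejecting unknown modes."""
    if mode not in PROFILES:
        raise ValueError(f"unknown mode: {mode!r}")
    return PROFILES[mode]
```

Keeping both profiles derived from one base means a setting shared by both modes is only ever changed in one place.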

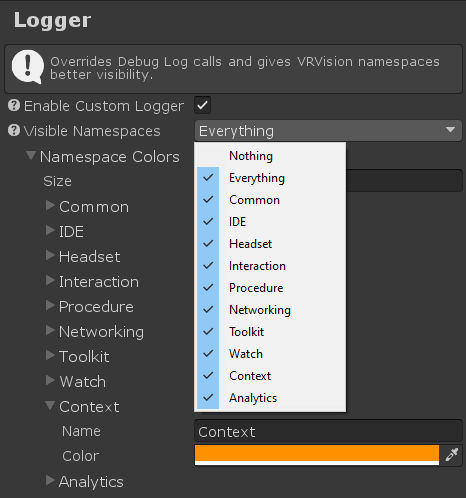

Custom Logger

Since a project can contain hundreds of scripts, the console can become cluttered with calls from many systems, which hinders debugging. One solution is to remove debug messages from SDK scripts entirely, which is NOT a good idea since some systems can’t be followed without a few essential prints. Another is to wrap every debug call in an if statement, but that would be clunky and set a horrible precedent for the SDK.

My custom logger is a DLL that non-intrusively intercepts debug messages from scripts within the SDK and shows only those from selected namespaces (an inclusive filter), with an option to color-code them. All of this is configured in the project settings, which keeps the process centralized and simple. There is no need to alter scripts or comment/uncomment lines.
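The filtering idea can be sketched as an allowlist keyed by namespace. This is a Python illustration, not the DLL's implementation; the `NamespaceFilter` class, its methods, and the `Sdk.*` namespaces are hypothetical. The `<color=...>` tag mirrors the rich text markup the Unity console understands.

```python
from typing import Dict, Optional


class NamespaceFilter:
    """Inclusive filter: only messages from allowed namespaces pass."""

    def __init__(self) -> None:
        # Maps an allowed namespace to its color ("" means no color).
        self.allowed: Dict[str, str] = {}

    def allow(self, namespace: str, color: str = "") -> None:
        self.allowed[namespace] = color

    def _match(self, source: str) -> Optional[str]:
        # A source matches an allowed namespace if it is that namespace
        # or nested inside it; the longest match wins.
        hits = [ns for ns in self.allowed
                if source == ns or source.startswith(ns + ".")]
        return max(hits, key=len) if hits else None

    def format(self, source: str, message: str) -> Optional[str]:
        """Return the line to print, or None if the message is filtered out."""
        ns = self._match(source)
        if ns is None:
            return None
        line = f"[{ns}] {message}"
        color = self.allowed[ns]
        return f"<color={color}>{line}</color>" if color else line


if __name__ == "__main__":
    log_filter = NamespaceFilter()
    log_filter.allow("Sdk.Headset", color="cyan")
    for source in ("Sdk.Headset.Validation", "Sdk.Interaction"):
        line = log_filter.format(source, "hello")
        if line is not None:
            print(line)
```

Because the allowlist lives in one object, changing what appears in the console never requires touching the scripts that emit the messages.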

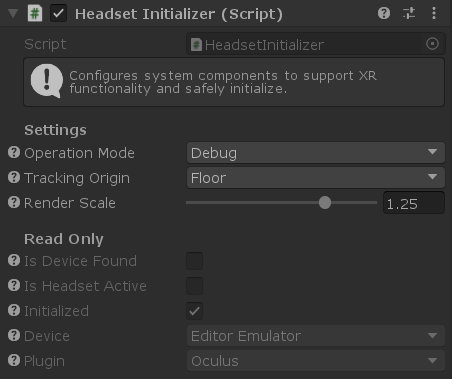

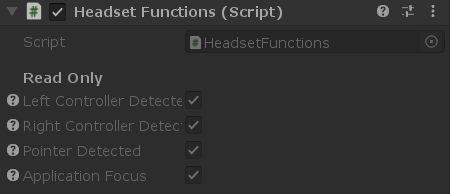

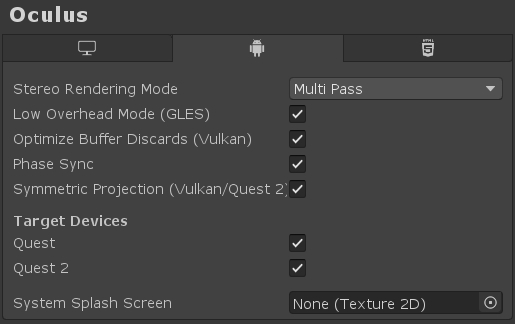

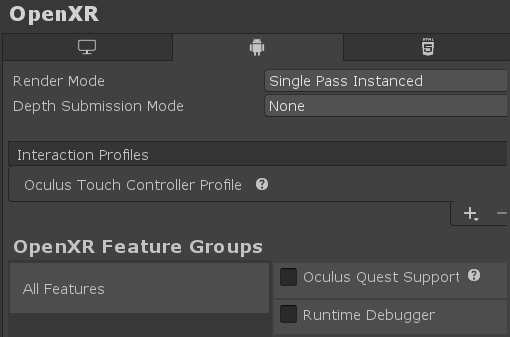

Headset System

The Headset System is an adaptor that lets the SDK work with different XR plugins (or without one). This keeps the systems device-agnostic while taking advantage of key features from different plugin providers. This way, I can strategically tailor development and builds to the following:

- Rapidly develop and test using Editor Emulator

- Use Oculus Integration to test live in editor via headset

- Build on Oculus Integration for better performance settings

- Build on OpenXR Plugin for universal headset support

- Support future configurations

Architecturally, the Headset System unifies many Unity systems and external plugins behind a common interface to provide a sound development environment. For context, VR development was less standardized at the time, so it was incredibly difficult to develop without things breaking. Behind the scenes, the Headset System constantly checks whether any configuration has become invalid. This made development much more stable and allowed my developers to focus more on developing and less on debugging.
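A minimal sketch of the adaptor pattern described here, in Python rather than the SDK's own code. All class and method names are hypothetical, the pose values are placeholders, and falling back to another provider when the preferred one is invalid is an assumption of the example; the text only states that configurations are checked for validity.

```python
from abc import ABC, abstractmethod
from typing import List, Tuple


class HeadsetProvider(ABC):
    """Everything the rest of the SDK may know about a headset backend."""

    name: str = "unnamed"

    @abstractmethod
    def is_valid(self) -> bool:
        """Report whether this backend's configuration is usable."""

    @abstractmethod
    def head_pose(self) -> Tuple[float, float, float]:
        """Return the head position."""


class EditorEmulator(HeadsetProvider):
    name = "Editor Emulator"

    def is_valid(self) -> bool:
        return True  # needs no plugin or hardware

    def head_pose(self) -> Tuple[float, float, float]:
        return (0.0, 1.7, 0.0)  # placeholder value


class PluginProvider(HeadsetProvider):
    def __init__(self, name: str, installed: bool) -> None:
        self.name = name
        self.installed = installed

    def is_valid(self) -> bool:
        return self.installed

    def head_pose(self) -> Tuple[float, float, float]:
        return (0.0, 1.6, 0.0)  # placeholder value


class HeadsetSystem:
    def __init__(self, preferred: HeadsetProvider,
                 fallback: HeadsetProvider) -> None:
        self.preferred = preferred
        self.fallback = fallback

    def problems(self) -> List[str]:
        """List every provider whose configuration is currently invalid."""
        return [f"{p.name} configuration is invalid"
                for p in (self.preferred, self.fallback) if not p.is_valid()]

    def active(self) -> HeadsetProvider:
        # Assumed behavior: use the fallback while the preferred one is invalid.
        return self.preferred if self.preferred.is_valid() else self.fallback
```

Gameplay code would only ever call `HeadsetSystem.active().head_pose()`, so swapping plugins does not ripple into the rest of the project.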

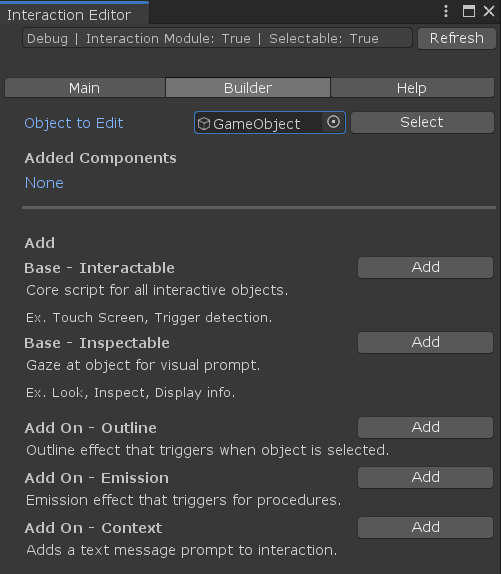

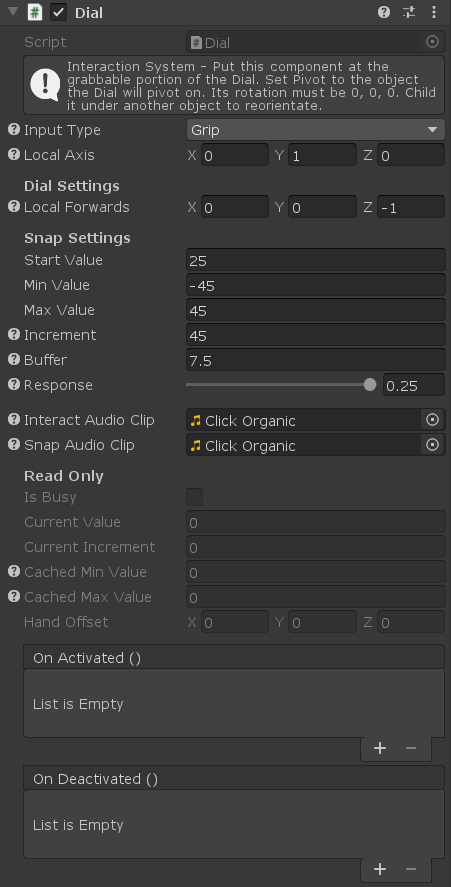

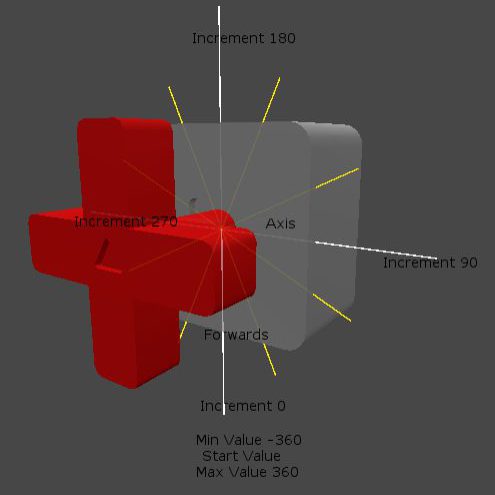

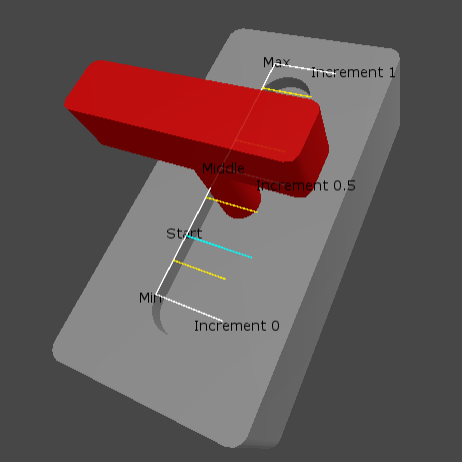

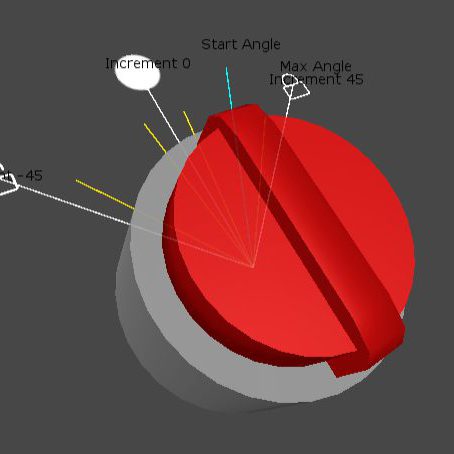

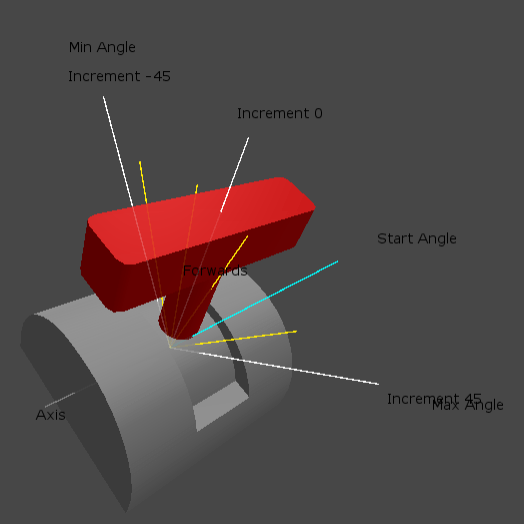

Interaction System

A comprehensive interaction system built from the ground up. It has robust input controllers for detection handling and input mapping, and composition-based components for building any type of interactable object: touching, clicking, grabbing, turning, sliding, etc. Additional cues such as outlines, haptic feedback, and sound effects are layered on to provide visual, tactile, and auditory feedback, helping players immerse themselves in and engage with the virtual environment.
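To illustrate what composition-based means here, the Python sketch below builds an interactable by attaching small, independent feedback behaviors to events instead of subclassing. The `Interactable` class, the event names, and the cue functions are hypothetical stand-ins that return strings where a real component would draw an outline, vibrate a controller, or play audio.

```python
from typing import Callable, Dict, List


class Interactable:
    """An object whose behavior is the sum of the cues attached to it."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._cues: Dict[str, List[Callable[["Interactable"], str]]] = {}

    def on(self, event: str,
           cue: Callable[["Interactable"], str]) -> "Interactable":
        """Attach a cue to an event; returns self so calls can be chained."""
        self._cues.setdefault(event, []).append(cue)
        return self

    def trigger(self, event: str) -> List[str]:
        """Run every cue attached to the event, in the order it was added."""
        return [cue(self) for cue in self._cues.get(event, [])]


# Stand-in cues: each one is reusable on any interactable.
def outline(obj: Interactable) -> str:
    return f"outline:{obj.name}"


def haptic_pulse(obj: Interactable) -> str:
    return f"haptic:{obj.name}"


def play_sound(clip: str) -> Callable[[Interactable], str]:
    return lambda obj: f"sound:{clip}"


if __name__ == "__main__":
    lever = (Interactable("lever")
             .on("touch", outline)
             .on("grab", haptic_pulse)
             .on("grab", play_sound("click")))
    print(lever.trigger("grab"))
```

A new kind of object is then a new combination of existing cues rather than a new class.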

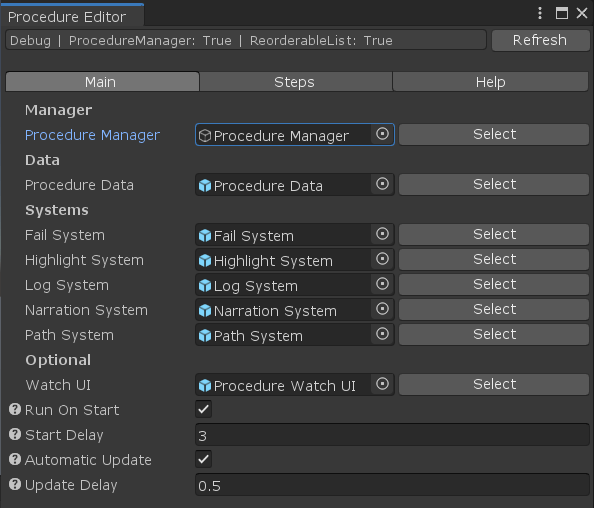

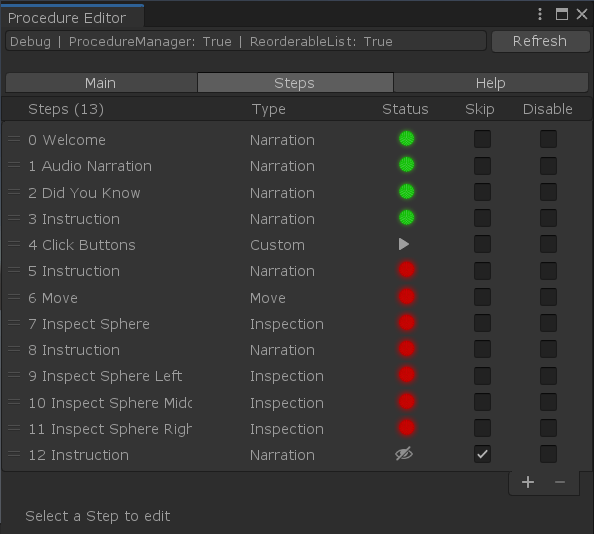

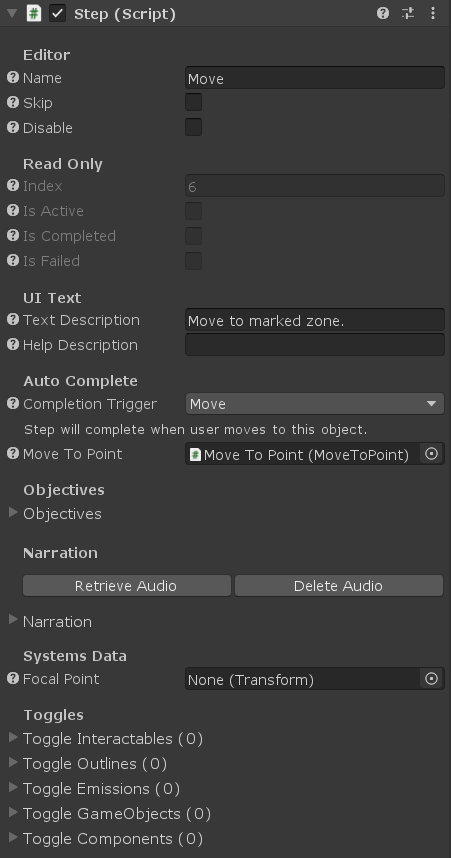

Procedure System

The Procedure System automates much of the tedious work of building scenarios. At a high level, it manages the state and progression of steps with a data-driven approach. Every step is treated as a data container, and sub-systems such as narrations, subtitles, waypoints, and analytics read their data from the active step.
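The data-driven approach can be sketched as follows, in Python rather than the SDK's own code. The `Step` fields, the `Procedure` class, and the sub-system callbacks are hypothetical; the point is only that sub-systems hold no step-specific logic and simply read whatever the active step contains.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Step:
    """A step is pure data; it has no behavior of its own."""
    title: str
    narration: Optional[str] = None
    subtitle: Optional[str] = None
    waypoint: Optional[str] = None


class Procedure:
    def __init__(self, steps: List[Step],
                 subsystems: List[Callable[[Step], None]]) -> None:
        self.steps = steps
        self.subsystems = subsystems
        self.index = -1  # no step is active until the first advance()

    @property
    def active(self) -> Optional[Step]:
        if 0 <= self.index < len(self.steps):
            return self.steps[self.index]
        return None

    def advance(self) -> Optional[Step]:
        """Move to the next step and let every sub-system read it."""
        if self.index < len(self.steps):
            self.index += 1
        step = self.active
        if step is not None:
            for subsystem in self.subsystems:
                subsystem(step)
        return step


if __name__ == "__main__":
    def narrator(step: Step) -> None:
        if step.narration:
            print("play", step.narration)

    def subtitles(step: Step) -> None:
        if step.subtitle:
            print("show", step.subtitle)

    procedure = Procedure(
        [Step("Pick up wrench", narration="vo_01.wav", subtitle="Grab the wrench"),
         Step("Walk to valve", waypoint="valve")],
        [narrator, subtitles],
    )
    procedure.advance()
```

Adding a sub-system is then a matter of registering another reader, without changing the steps or the other sub-systems.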

Building custom scenarios involves a lot of moving parts. For example, the player performs step 1 and moves on to step 2. But behind the scenes, we need to wait 1 second, play some sounds, change the UI text, toggle some game objects, and trigger some events. Imagine this for 50 steps: it’s a fair amount of work to do without the Procedure System.

Custom content and behaviors can be easily added and integrated. Steps can be completed in sequence or in parallel via StepGroups. Common tasks such as narrations, inspections, and move-to steps complete themselves automatically and proceed to the next step.
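The difference between sequential and parallel completion can be sketched like this. `StepGroups` is the name used above, but the Python class below, its fields, and its methods are assumptions made for illustration, and step names are assumed to be unique within a group.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class StepGroup:
    steps: List[str]            # unique step names, in authored order
    parallel: bool = False      # False: one at a time, True: any order
    done: Set[str] = field(default_factory=set)

    def available(self) -> List[str]:
        """Steps the player may complete right now."""
        remaining = [s for s in self.steps if s not in self.done]
        # In a sequential group only the next unfinished step is open.
        return remaining if self.parallel else remaining[:1]

    def complete(self, step: str) -> bool:
        """Mark a step done if it is currently available."""
        if step not in self.available():
            return False
        self.done.add(step)
        return True

    @property
    def finished(self) -> bool:
        return len(self.done) == len(self.steps)


if __name__ == "__main__":
    checks = StepGroup(["check gauge", "check valve"], parallel=True)
    checks.complete("check valve")   # allowed out of order
    checks.complete("check gauge")
    print(checks.finished)
```

With `parallel=False`, the same `complete("check valve")` call would be rejected until `"check gauge"` is done.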